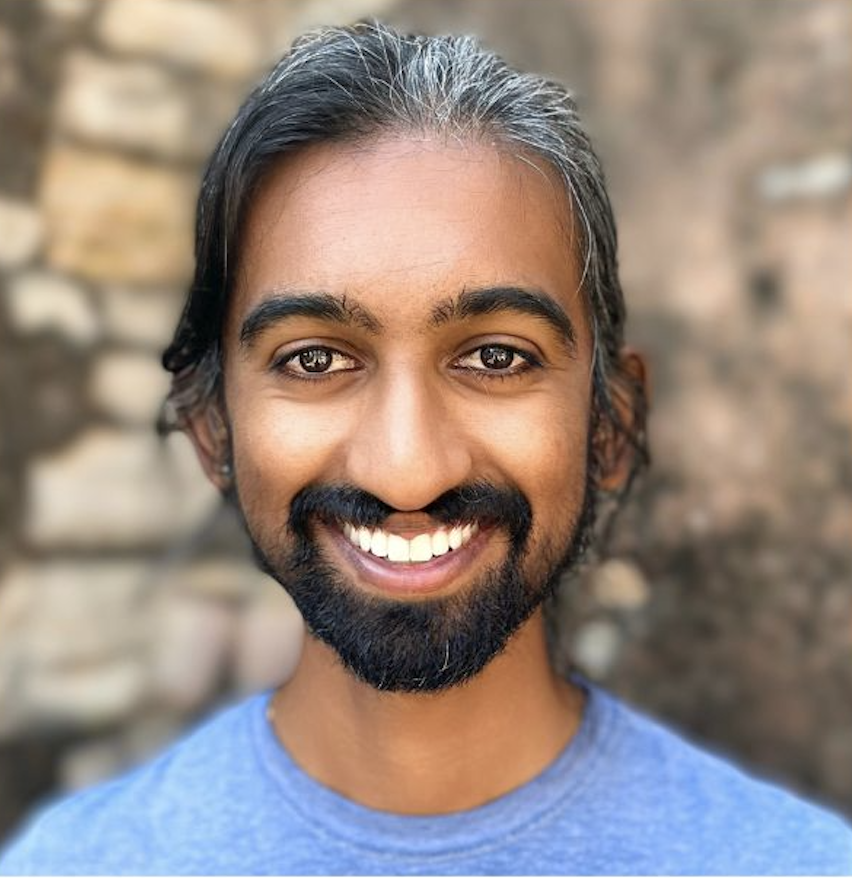

I am a member of the technical staff at Anthropic, on the pretraining team. Previously, I was a senior research scientist on the Llama team at Meta GenAI. I received my PhD in Computer Science from the University of Washington. During my graduate studies, I was supported by the 2022 Bloomberg PhD Fellowship, was a visiting researcher at FAIR, and was a predoctoral resident at AI2.